Summary

The new 2020 iPad Pro comes with a promise to improve augmented reality (AR) thanks to its new built-in LiDAR sensor. If it can live up to the hype, the LiDAR sensor may be exactly the missing piece we need to deliver a superb AR experience—a quality of experience that, until now, has been somewhat elusive.

The quality of such LiDAR-supported AR primarily depends on how well the LiDAR sensor works with traditional hardware and with optimized software that can enhance tracking algorithms by incorporating new sensory outputs. In this report, vGIS—the leading developer of augmented reality (AR) and mixed reality (MR) solutions for field services—evaluates whether the new LiDAR sensor improves spatial tracking.

Introduction

In mid-March, Apple announced a shiny new gadget: the 2020 iPad Pro. Along with mostly evolutionary features, Apple included something completely new. A built-in LiDAR scanner is a first in tablets, and it came with a grand Apple-esque promise:

The breakthrough LiDAR Scanner enables capabilities never before possible on any mobile device. The LiDAR Scanner measures the distance to surrounding objects up to 5 meters away, works both indoors and outdoors, and operates at the photon level at nano-second speeds. New depth frameworks in iPadOS combine depth points measured by the LiDAR Scanner, data from both cameras and motion sensors, and is enhanced by computer vision algorithms on the A12Z Bionic for a more detailed understanding of a scene. The tight integration of these elements enables a whole new class of AR experiences on iPad Pro.

The inevitable iFixit teardown confirmed that LiDAR is indeed the crown jewel of the new iPad, even if much of the device only incrementally improves on the 2018 version of the iPad Pro.

Speculation has been rampant about how the LiDAR scanner may offer a glimpse of Apple’s AR strategy: its plans for using AR capabilities to improve the core user experience in revolutionary ways, possible iPhone 12 features, and possible features of the upcoming Apple Glasses. vGIS is looking at this story from a slightly different angle.

But, first, what is LiDAR?

LiDAR (light detection and ranging) works by illuminating a target with laser beams and measuring the reflected light with a sensor in order to calculate the distance to the target. LiDAR is not a new or unconventional concept. We are surrounded by LiDAR scanners. In everything from self-driving cars to surveying equipment, LiDAR is deployed to help us capture and understand the properties of the world. Apple’s own iPhone uses a form of LiDAR to improve facial recognition. However, given how prominently Apple is now featuring the AR-assisting capability of LiDAR, the company may be signaling that it has much bigger plans for the technology.
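The distance calculation described above can be sketched in a few lines. This is a minimal illustration that assumes simple time-of-flight ranging, where distance is half the round-trip travel time multiplied by the speed of light; this report does not examine how the iPad’s sensor computes distance internally, and the function names are hypothetical.

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the target, given the time a light pulse takes to go out and back.

    Assumption: plain time-of-flight ranging. The pulse covers the distance
    twice, so the one-way distance is half of speed * time.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip travel time, in seconds, for a target at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # 5 meters is the maximum range quoted in Apple's announcement.
    nanoseconds = round_trip_for_distance(5.0) * 1e9
    print(f"Round trip for a 5 m target: {nanoseconds:.1f} ns")
```

For a target at the quoted 5-meter limit, the round trip takes roughly 33 nanoseconds, which is consistent with the “nano-second speeds” mentioned in the announcement.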

Traditional AR relies mainly on processing the live video feed captured by a device’s cameras. The technology is not perfect; live video processing may fall prey to misinterpretation and to unfavorable lighting, whether too dim or too bright. But LiDAR emits its own light and may offer superior spatial tracking. Under normal conditions, LiDAR (or so the theory goes) may identify distinct features much more clearly than purely optical tracking can, and then use those features to anchor the augmented reality in space. Although camera-based AR may malfunction or stop working altogether in difficult light conditions, in the same conditions LiDAR may keep the system stable by providing additional information.

In its press release, Apple noted that its LiDAR “works both indoors and outdoors,” suggesting that Apple may have figured out how to use LiDAR to improve spatial tracking. For vGIS, this is a capability of utmost importance, since accurate spatial tracking would dramatically improve the user’s experience. If Apple has indeed accomplished this, the feature would enable consumer-grade devices to provide unprecedented accuracy.

Early reports have suggested that with no compelling software yet available to exploit the new hardware features, iPad’s LiDAR is a solution in search of a problem. Perhaps Apple plans to help third-party developers improve the AR experience? We set out to check this hypothesis.

Methodology

Our objective was to measure the relative performance of the new iPad’s LiDAR sensor and to determine whether it improves positional tracking.

We made the comparison using the vGIS system without an external GNSS. This way, the devices had to rely entirely on AR for positioning.

The main test groups included Team Android, represented by two of (almost) the best devices that Android has to offer, the Samsung Galaxy S10 and the Samsung Galaxy Tab S6; and Team Apple, represented by the latest hardware in the iOS lineup, the iPhone 11 and the 2020 iPad Pro. For the sake of comparison, we also added the Microsoft HoloLens 2 to the roster. But we included it only for benchmarking purposes, since the HoloLens, with its multi-camera array, is in a league of its own.

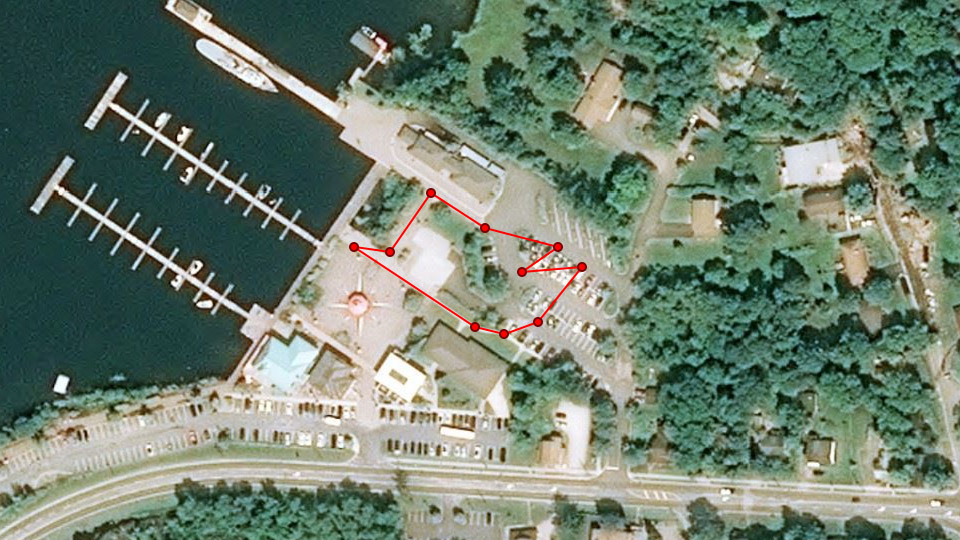

We prepared a course that is representative of a typical locate job: a mixture of areas with flat surfaces that have few distinguishing features, like parking lots and sidewalks; and areas that have well-defined features like curbs, hedges, and barriers. The course was about 250 meters long (820 feet) and included a few sharp turns, i.e., turns greater than 90 degrees.

A Leica GG04 plus was used to validate the positions of the milestones.

For each test, we affixed two devices to each other to ensure that their performance would be unaffected by shaking or other uneven movement.

At the end of each test, we recorded the performance of each device as measured by the deviation of the final position in AR from the milestone marker.

The tests were performed in three environments: brightly lit, overcast, and twilight or dark.

Our main hypothesis was that the LiDAR would offer noticeably better tracking capabilities for the iPad, especially under difficult light conditions. So how did it do?

Results

When planning this test, we thought we would get a clear this-better-than-that picture. But the results were much more nuanced than we had expected.

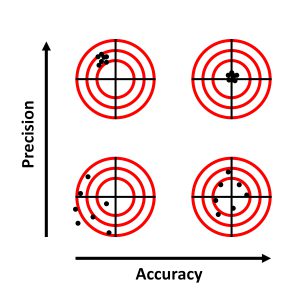

Accuracy versus Precision

When discussing positioning, the terms “accuracy” and “precision” are often used interchangeably. But they name two distinct concepts. “Accuracy” refers to how close to the target the readings fall, whereas “precision” refers to how tightly readings are grouped together.

The graphic below illustrates the difference.

The data from the tests are provided below in meters. For each test and device, outliers were discarded (the 5% of the readings that were most extreme).

Accuracy was determined by taking the arithmetic mean of all results. Each result is a calculation of the distance between the final AR position and the milestone marker. The smaller the number, the higher the accuracy.

Precision was determined by grouping the results. It is shown by the diameter of the cluster of final AR positions. The smaller the number, the higher the precision.
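The two metrics defined above can be sketched as follows. This is an illustration under stated assumptions, not the exact procedure used in the tests: positions are treated as 2D points in meters, "most extreme" is assumed to mean farthest from the milestone marker, and the cluster diameter is assumed to be the largest distance between any two final positions. All function names are hypothetical.

```python
import math
from itertools import combinations

Point = tuple[float, float]  # (x, y) in meters


def discard_outliers(positions: list[Point], marker: Point,
                     fraction: float = 0.05) -> list[Point]:
    """Drop the given fraction of readings that lie farthest from the marker.

    Assumption: "most extreme" means largest distance to the marker.
    The number dropped is rounded down.
    """
    ranked = sorted(positions, key=lambda p: math.dist(p, marker))
    n_drop = int(len(ranked) * fraction)
    return ranked[: len(ranked) - n_drop]


def accuracy(positions: list[Point], marker: Point) -> float:
    """Arithmetic mean of the distances from each final position to the marker."""
    return sum(math.dist(p, marker) for p in positions) / len(positions)


def precision(positions: list[Point]) -> float:
    """Diameter of the cluster: the largest distance between any two positions.

    Assumption: this is one reasonable reading of "diameter of the cluster".
    """
    if len(positions) < 2:
        return 0.0
    return max(math.dist(a, b) for a, b in combinations(positions, 2))


if __name__ == "__main__":
    marker = (0.0, 0.0)
    # Made-up final positions, for illustration only (not test data).
    finals = [(1.0, 0.0), (1.2, 0.0), (1.1, 0.2)]
    kept = discard_outliers(finals, marker)
    print(f"accuracy:  {accuracy(kept, marker):.2f} m")
    print(f"precision: {precision(kept):.2f} m")
```

Under these definitions, a device can be precise without being accurate: a tight cluster of final positions that sits well away from the marker yields a small precision value and a large accuracy value.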

| Device | Daylight accuracy (m) | Daylight precision (m) | Overcast accuracy (m) | Overcast precision (m) | Twilight or darkness accuracy (m) | Twilight or darkness precision (m) |
| --- | --- | --- | --- | --- | --- | --- |
| iPhone 11 | 1.1 | 0.5 | 1.1 | 0.6 | 2 | 1.2 |
| 2020 iPad Pro | 1.2 | 0.4 | 1.2 | 0.4 | 2 | 1.2 |
| Samsung Galaxy S10 | 0.6 | 1.2 | 0.5 | 1.3 | 2.8 | 3 |
| Samsung Galaxy Tab S6 | 1.0 | 2.3 | 1.1 | 2 | 4 | 6.8 |
| HoloLens 2 | 0.2 | 0.1 | 0.2 | 0.1 | N/A | N/A |

Accuracy

System accuracy varied from test to test. In about 70% to 75% of all cases, Android provided higher accuracy than the iOS devices, ending up closer to the finish marker.

However, when Android was off, it was off by a higher margin than iOS. In two instances, at the end of the round trip, Galaxy Tab S6 finished 3.5 meters (11.5 feet) from the destination.

Surprisingly, both the iPad and the iPhone exhibited a nearly identical bias. Consistently, in test after test, the iPad and iPhone ended up in the same place, about 1.2 meters (4 feet) away from the check point at the end of a trip of 250 meters (820 feet). The behavior suggested that both devices used the same processing algorithm, an algorithm that worked with remarkable consistency.
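To put these offsets in proportion, the final error can be expressed as a share of the distance walked. The snippet below is a small illustrative calculation using the figures quoted above; the function name is hypothetical, and dividing by the course length is an assumed way of normalizing, since the results in this report are given only as absolute distances.

```python
def drift_percent(final_error_m: float, path_length_m: float) -> float:
    """Final position error as a percentage of the distance walked."""
    if path_length_m <= 0:
        raise ValueError("path length must be positive")
    return 100.0 * final_error_m / path_length_m


if __name__ == "__main__":
    course_m = 250.0  # course length used in the tests
    print(f"iOS bias (1.2 m):        {drift_percent(1.2, course_m):.2f}%")
    print(f"Tab S6 worst case (3.5 m): {drift_percent(3.5, course_m):.2f}%")
```

With these figures, 1.2 meters over 250 meters is about 0.48% of the path, and the 3.5-meter misses of the Galaxy Tab S6 are about 1.4%.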

Precision

iOS devices were noticeably more precise. Most final positions of both the iPad and the iPhone were within a range of 40 centimeters (1.3 feet). We didn’t notice any major outliers, and time after time we found the finish line (or finish point) to be in approximately the same position.

Although iOS devices behaved like clones of each other, Android devices rarely finished in the same spot. Even when closer to the target, Android devices sometimes undershot, sometimes overshot the destination. In two instances, Galaxy Tab S6 ended up 3.5 meters (11.5 feet) away from the destination—for no apparent reason. In the Android-only camp, though, the Galaxy S10 was substantially more precise than the Galaxy Tab S6.

Error Correction

Typically, optical tracking relies on the ability of devices to establish spatial anchors or waypoints. As the device recognizes the area where it has been before, it matches the alignment of AR overlays to the spatial anchors that it is tracking.
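The idea of anchor-based correction can be sketched as follows. This is a deliberately simplified illustration, not the ARCore or ARKit implementation: it assumes 2D positions, ignores rotation, and uses hypothetical names throughout. When a previously stored anchor is recognized again, the gap between where the tracker now places it and where it was originally recorded is taken as the accumulated drift, and that drift is removed from the device’s estimated position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec2:
    """A 2D position or offset, in meters (hypothetical helper type)."""
    x: float
    y: float

    def __sub__(self, other: "Vec2") -> "Vec2":
        return Vec2(self.x - other.x, self.y - other.y)


def estimate_drift(anchor_stored: Vec2, anchor_observed: Vec2) -> Vec2:
    """Accumulated drift: where the anchor appears now minus where it was stored.

    Assumption: translation only. Real trackers also correct orientation.
    """
    return anchor_observed - anchor_stored


def correct_position(estimated_position: Vec2, drift: Vec2) -> Vec2:
    """Remove the accumulated drift from the device's estimated position."""
    return estimated_position - drift


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    stored = Vec2(0.0, 0.0)       # anchor recorded at the start of the walk
    observed = Vec2(0.5, -0.25)   # same anchor as the tracker sees it on return
    drift = estimate_drift(stored, observed)
    print(correct_position(Vec2(10.5, 4.75), drift))
```

A device that never re-recognizes an anchor has nothing to compare against, so any drift it has accumulated stays in place.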

The test distance of 250 meters (820 feet) seemed to push the limits of what the typical device was designed to handle. Despite the challenge, both Android devices performed well during the test, recognizing the starting point accurately and correcting the drift accumulated. In some instances, it took up to 4 seconds for the devices to recognize and correct the drift, but they did so. We encountered no apparent false positives—cases in which the device would incorrectly identify the area as something it remembered from before—during this round of testing. However, false positives are not uncommon for Android devices.

On the other hand, neither the iPad nor the iPhone applied drift correction at the end of the walk. This behavior suggests that iOS (and ARKit) either does not use session-wide spatial anchors or, more likely, releases them from memory faster than Android does.

Quality of AR Experience

The side-by-side nature of our comparison revealed the differences in the quality of AR user experience. Although the differences may not be perceptible outside the test environment, during testing they were quite significant.

The iPad and iPhone provided a noticeably smoother AR experience. AR view on iOS devices seemed more fluid and responsive. Indeed, it was almost as smooth as operating the device’s native camera app.

In contrast, the Galaxy S10 and Galaxy Tab S6 felt sluggish after a walk of 50 meters (164 feet), with frames occasionally freezing and skipping, as if the hardware were struggling to process the AR.

Combined with the absence of error correction in iOS devices, the jittery AR experience on Android made us wonder whether ARCore (Android) does a better job than ARKit (iOS) in setting and keeping spatial anchors. At the same time, though, the better sensor and optical feed processing of ARKit compensates for the lack of long-term spatial memory, resulting in more precise and predictable results for the iOS devices.

LiDAR Test: iPad versus iPhone

We found very little difference between the 2020 iPad Pro and the iPhone 11. In many trial runs, the two devices finished within 30 centimeters (1 foot) of each other. The similarity of these results suggests that Apple has not yet incorporated LiDAR into its spatial-tracking algorithms, instead still relying on the same spatial tracking already included in other iOS devices.

Dim Light

Testing in dim light revealed drastic differences in performance. As the sun went down and darkness began to fall, the dim light caused Android cameras to lose focus, resulting in agonizingly frequent drift. Eventually, both the Galaxy S10 and Galaxy Tab S6 gave up and stopped tracking their surroundings. The AR visuals just stayed stuck on the screen like a fly on a windshield.

But the iOS devices soldiered on. As long as there were any vertical surfaces and any light to work with, the iPad and iPhone kept working. The performance of iOS devices at night was nothing short of remarkable. Even when the screen was pitch-black, both the iPad and the iPhone managed to track their surroundings.

The situation changed when we reached open spaces. As soon as the iOS devices arrived in the open parking lot, tracking quality declined and became only marginally better than that of the Galaxy S10.

Presumably, LiDAR should have enabled better nighttime performance, since the LiDAR sensor doesn’t rely on visible light. This was not the case in our tests. The iPhone performed admirably well, and over the course of our testing we observed no noticeable differences between the performance of the new iPad Pro and the performance of the iPhone.

HoloLens

As demonstrated by several studies, the Microsoft HoloLens and Microsoft HoloLens 2 provide superior tracking thanks to their multicamera arrays. Since the camera arrangement and spatial tracking of the HoloLens differ greatly from those of Android and iOS devices, we used Microsoft HoloLens 2 only for benchmarking purposes, just to see how well it does.

Over shorter distances, the HoloLens 2 did not suffer from any noticeable drift—none at all. Longer walks did cause the HoloLens to drift slightly, especially when challenged by the flatness of the parking lot. However, it quickly recovered once it encountered more familiar environments. We confirmed that with respect to accuracy, precision, and ability to correct drift, HoloLens 2 is far superior to both Android and iOS.

The HoloLens 2 proved to be less sensitive to poor light conditions than the Android devices. But in near-complete darkness, it could not match the performances of the iPad and the iPhone.

Unexpected Findings

Our testing of LiDAR suggested that Apple is using AR to help LiDAR, not the other way around. We used the LiDAR scanner in dim and dark areas to create a 3D mesh. Whenever AR tracking hit a snag because very little light was entering the camera, the LiDAR scanning glitched; it resumed scanning only when optical tracking had returned to normal. Apparently, then, LiDAR is dependent on AR and disengages when AR tracking is suboptimal. If this conclusion is correct, it is disappointing. We had expected LiDAR to provide an additional source of information that could improve the device’s ability to track in poor light conditions.

Another discovery was the superiority of the iPhone’s tracking to that of Android devices (and previous generations of iPhones) in dark environments with vertical surfaces. Even with all of the cameras completely covered, the iPhone continued to accurately project AR visuals for some time. There was more uncertainty in its tracking, but the tracking continued to work. It looks as if Apple managed either to squeeze more accurate hardware into the iPhone or to use its internal sensors much more efficiently than Android devices can. Under the same conditions, Android devices simply froze. Even when cameras were only partially covered, Android-based AR drifted or froze. This behavior suggests that Android relies mostly on optical tracking, whereas iOS engages a wide variety of sensors to keep AR stable.

Finally, the AR performance of the Galaxy Tab S6 was worse than that of all other devices in our comparison. The tablet couldn’t keep up with its smaller and older cousin, the Galaxy S10, and it lagged behind the iOS devices in precision and user experience. Of the devices we tested, the Tab S6 was by far the least accurate and least precise. Moreover, in challenging light conditions, its camera quickly went out of focus, and the focus was impossible to restore.

Conclusions

For countless industries, LiDAR scanners have turned dreams into reality. Driverless cars owe many of their capabilities to these light-emitting sensors. With the help of hardware OEMs, software developers, and the companies developing AR frameworks, LiDAR technology may revolutionize the AR world as well.

However, the current implementation of the LiDAR scanner embedded in the 2020 iPad Pro falls short of expectations. It may offer some improvements in surface scanning and distance detection. But with respect to spatial tracking, the LiDAR appears to add little or no value. Perhaps Apple has not yet fully utilized the LiDAR sensor and has not yet fully exploited the capabilities of the new hardware. On the other hand, perhaps LiDAR is simply unable to improve position tracking, so that doing so requires an alternative technology.

The iPad’s AR experience is geared to work best in indoor environments with consistent lighting, vertical surfaces, and short travel distances around the play area. Yet as a hardware platform, the iPad offers many advantages for outdoor tasks as well, and its inability to make use of LiDAR outputs to improve spatial tracking looks like a missed opportunity.

Distance tracking seems to be done more accurately by the iPhone and the iPad than by Android devices. During our tests, we found that iOS visuals were more trustworthy than Android’s when we were walking away from the initial calibration point. If a job requires walking longer distances, the iPhone and the iPad are a better choice. But in localized environments like intersections, we would expect Android devices to do a better job than iOS by “remembering” their surroundings and thereby correcting any positioning drift.

In most situations, Android phones (specifically, the Samsung Galaxy S10 used in our tests and the Samsung Note 10, which we did not test in this comparison) perform similarly to the 2020 iPad. The iPhone 11 didn’t perform very differently from the iPad either, with near-identical daytime and nighttime performance.

The 2020 iPad Pro offers the largest screen in the tablet market. Given its smooth and refined AR experience, the prospect of buying it is compelling if you are looking for a new device to run your AR apps. However, if you are looking to replace an existing device, the LiDAR doesn’t bring noticeable benefits and the 2020 iPad doesn’t offer any other notable features to justify the upgrade.